AI Fundamentally Changes Cybersecurity Landscape

Regardless of whether AI applications are useful or even used at all

AI technologies are expected to fundamentally change our reality, for better or worse. There are many promises, hopes, and fears regarding the scope and timeline of those changes. Most of all, though, there are a lot of bets that we are finally about to experience a long AI summer. The result is a speed of AI development that is unprecedented compared to prior technical revolutions. AI applications move quickly from ideas and experiments to production, and new capabilities are increasingly embedded in everyday life, despite frequent obstacles in their practical implementation. There are still many unknowns regarding technical limits, social and economic reactions, lessons from unavoidable failures, and other unintended consequences. The end state of this AI revolution cannot yet be predicted. There are use cases and scenarios where we have reasons to be optimistic (e.g., improving productivity and accuracy in radiology), but there will also be many serious disappointments. One thing we can say for sure is that AI technologies have already changed our cybersecurity landscape, with far-reaching consequences. It doesn’t matter whether AI applications are valuable; they still come with increased risks, and even if we choose to avoid AI, we still need to be ready for AI-supported attacks.

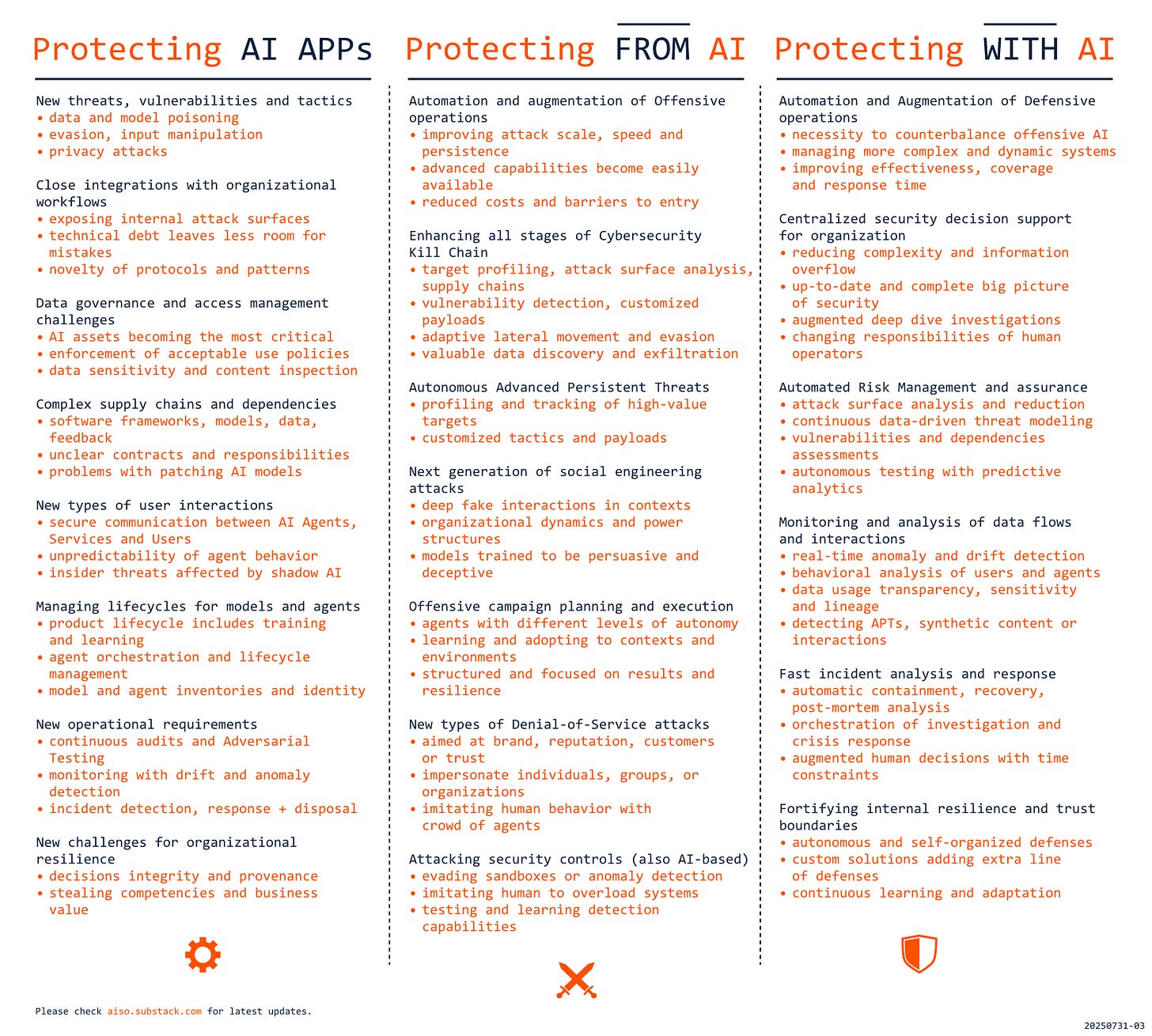

When it comes to the implementation of new technology in any non-trivial scenario, the security story usually follows the same pattern: NEW CAPABILITIES lead to NEW THREATS that require NEW MITIGATIONS. This pattern also applies to AI, but here things get more complex, as the term AI security can have different meanings depending on the context. The most common understanding is PROTECTING AI applications, as they face new threats for which we have gaps in controls and practices. On top of that, integrations with AI change internal attack surfaces, increase exposure to attackers, and can heavily stress existing security solutions. The second understanding is PROTECTING FROM AI, that is, from attacks that are enhanced (old ones) or enabled (new ones) by AI capabilities. AI automation and augmentation can vastly improve the coverage, speed, and success of offensive operations, lower the barriers to entry, and reduce costs. Finally, AI security can refer to PROTECTING WITH AI, as applying new capabilities on the defense side becomes a necessity for dealing with AI-supported offensive operations. That also creates opportunities to gain more control over, and a better understanding of, security posture and the effectiveness of defensive efforts. Each of these approaches to AI security comes with unique challenges and requirements, as presented in the diagram.

At first glance, many of the challenges above may seem theoretical, isolated, and of limited practical impact. It might seem that even though we don’t understand all the details and complete solutions are not there yet, we should be fine and have time to fill the gaps. Unfortunately, that is not the case, given the speed of change in the AI space. The moment an organization or a user adds AI capabilities to their regular workflows, their exposure to attacks and their security requirements start to change rapidly.

AI doesn’t need to be successful or even useful to have a critical impact on security.

For AI solutions to be valuable, they must be connected with our existing individual or organizational workflows. That usually means close integration of AI components with existing local computing infrastructure or sending data to external systems. In both cases, the users and their data are exposed to new threats regardless of the practical value that is delivered (a strong reason for making informed decisions about AI integrations). In practice, AI doesn’t need to be successful or even useful to have a critical impact on security. AI components usually operate on sensitive data, often unstructured, which creates new challenges for its inspection, validation, and classification (and it’s easier to reveal sensitive information during a conversation than in a query). Organizations are rarely ready (e.g., by having implemented a zero-trust model) to redirect critical data flows to external black boxes or to host autonomous and unpredictable agents in their infrastructure. Any technical debt, with security flaws taking months to patch, suddenly also becomes much more dangerous. On top of that, many of the AI technologies, protocols, and patterns are very new and haven’t been adequately tested yet. Model Context Protocol (MCP), one of the emerging standards for agent communication, was only released at the end of November 2024! With technologies so fresh, we should not be surprised by critical vulnerabilities (e.g., CVE-2025-49596, CVE-2025-6514). The challenges are also significant for individual users, as accessing advanced AI capabilities requires sharing data with external systems. Unsurprisingly, there are limited protections for the privacy and value of personal data in the context of AI, and user agreements, even from major vendors, do not provide sufficient clarity around data handling. Dependency on external companies with dynamic priorities, non-public practices, and not yet fully determined business models makes hosting local private models a much more attractive alternative.
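To make the inspection problem a bit more concrete, here is a minimal sketch of redacting obviously sensitive values from unstructured text before it leaves our infrastructure. Everything in it is an assumption made for illustration: the `redact` function, the `PATTERNS` table, and the three regular expressions are hypothetical, and a handful of patterns is nowhere near a real data classification solution. It only shows where such a control would sit in the data flow.

```python
import re

# Hypothetical patterns, for illustration only. Real classification of
# unstructured data needs far more than a few regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # US-style social security number, e.g. 123-45-6789.
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches of each pattern with a [LABEL] placeholder.

    Returns the redacted text and a count of replacements per label,
    which could be logged to see what users try to send out.
    """
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com, card 4111 1111 1111 1111."
    safe_prompt, found = redact(prompt)
    # Only safe_prompt would be passed on to an external AI service.
    print(safe_prompt, found)
```

Even this toy version shows the limits of pattern matching: it catches well-formed identifiers, but not the sensitive context that people reveal in free-form conversation, which is exactly why unstructured data is so hard to protect.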

Even if we choose not to use AI, we are vulnerable to attacks that are enabled or enhanced with AI.

It might seem that in such a situation, the reasonable solution would be just to stay away from AI and wait for others to learn the hard lessons and for the industry to mature. Unfortunately, such an approach would be only a partial solution. The perceived need to enter the AI space has taken over most organizations operating in the digital space, and they want our data, regardless of the risks and challenges. It is still not uncommon for AI capabilities (and the related access to data) to be pushed on users without asking for consent or even informing them. But there is also another problem, as AI technologies can be successfully applied in offensive and malicious operations aimed at organizations or individuals. Even if we choose not to use AI at the moment, we are still vulnerable to new attacks enabled by AI and old ones enhanced with AI. The impressive capabilities of Generative AI are game changers for social engineering and attacks aimed at the human element. AI-generated phishing operations are more effective and much cheaper, and campaigns using deepfake audio or voice cloning are no longer only research experiments. And this is only the beginning, as offensive agents can engage in complex interactions with potential targets, effectively navigate social contexts, or create fake personas, complete with life stories and vacation photos. LLMs can already be trained to be more persuasive than humans, including in deceptive scenarios, and unfortunately, such tactics are increasingly used against the most vulnerable groups, like seniors. The same capabilities can be applied in Advanced Persistent Threats, with profiling and tracking of potential targets and exploitation of their psychological vulnerabilities, all within organizational dynamics and power structures. They will also enable new types of Denial-of-Service campaigns aimed at brand, reputation, customers, or trust, with networks of agents imitating human behavior in online interactions. On top of that, agentic AI capabilities are expected to increase the speed of attacks by as much as 100x and are potentially applicable to automating or augmenting all stages of the Cyber Kill Chain.

As in many situations before, there is a security arms race between offensive and defensive applications of new technologies. Attackers usually have advantages over defenders in the early stages, as they can more freely test and learn the latest capabilities, while defenses must be developed in structured and non-disruptive ways. The attackers also have a lot to gain. We should expect heavy investments in offensive capabilities from individuals, organizations, and states, and we will surely be surprised by the levels of their evil innovation. The barriers to entry are getting lower, open-source models are broadly available (with performance comparable to closed ones), and even models from major providers are being tested for malicious purposes. At the same time, the potential ROI is significant, as users and groups highly vulnerable to fraud, as well as organizations struggling with their security, can become much easier targets for attackers who can use AI to implement advanced tactics cheaply and on a massive scale. Attackers might, unfortunately, be among the most certain winners of the Generative AI revolution, at least in the short term. Security was never boring (not a good thing!), but with AI, it becomes even more interesting.

See: https://cybersecuritynews.com/man-in-the-prompt-attack/
