AI Security Layers

A structured approach to the security of AI applications

The tasks of developing a trustworthy AI application and verifying that it is used responsibly can be overwhelming, because we still lack many of the security controls and processes we need. Segmenting the target into layers can make these tasks doable. In this post, we cover a layered approach that can be applied to the security of AI applications.

To secure decisions that are automated or augmented with results from AI applications, we need to understand the system as completely as possible. We should anticipate what can go wrong, how the system could be attacked, in which situations it might fail, and what the consequences would be. That is rarely easy, as AI applications are always part of a bigger system and are more closely embedded in our social, economic, and political realities than traditional software. New AI applications are moving to production at an unprecedented pace, and our existing security processes and controls are not ready for it. Security can be very costly when it is an afterthought. We need to find ways to keep up with these dynamic developments and, whenever possible, to get ahead of them and address risks proactively, before they materialize. We need simplicity so that we can focus on solving the problems that are already here.

In cybersecurity, we cannot focus only on a single element of a system. We need to ensure that there are no gaps at any level, which requires proper steps during the design, implementation, testing, and operation of a system. Similarly, in the case of AI security, we must focus on more than just the AI model, even though this is where we should expect most of the new threats. We need end-to-end security analysis to identify all elements and properties that could be used in an attack or lead to unintended consequences. That analysis is required to understand the threats that AI applications will face, determine the requirements that need to be met, evaluate the controls that should be implemented, identify gaps in our toolsets, and document limitations and constraints. This helps us answer the key question: is a particular AI application suitable to help us with specific decisions and their consequences?

Because ogres are like onions

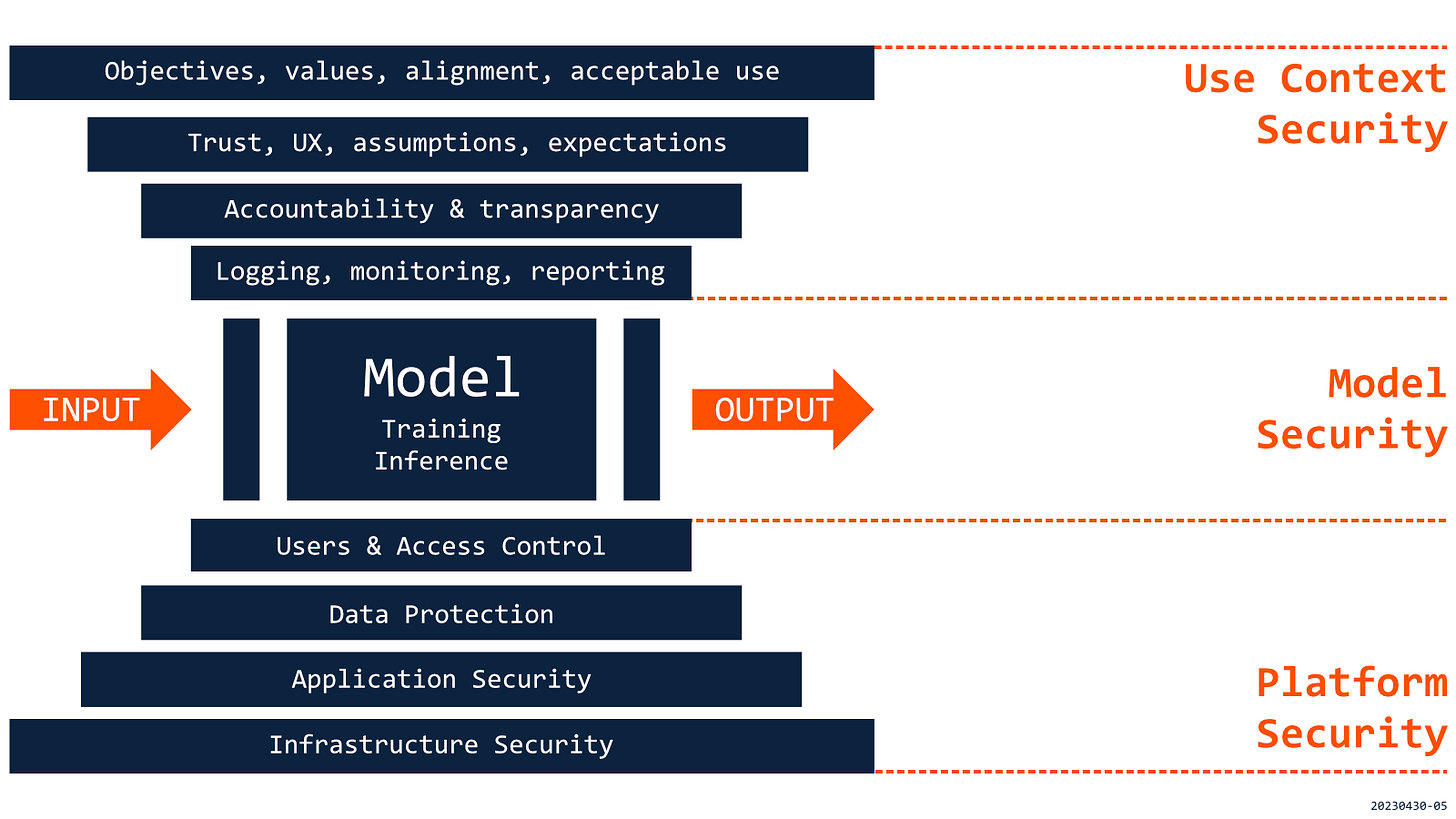

The proposed structure is focused on simplicity and includes three layers: USE CONTEXT, MODEL, and PLATFORM. Such a structure should allow us to look at the system holistically and better understand its attack surface. The layered approach will also help us separate areas that are more familiar from those that are new, identify where research is needed, and better prioritize the activities that are unique to each layer. Most of all, however, this structure will help us understand the relations and dependencies between the layers and manage the vertical integration of security and safety efforts. Building and operating AI applications requires various participants (individuals and teams) who likely have limited visibility into activities outside their scope (e.g., business user vs. data scientist vs. site engineer). A layered approach can facilitate communication and cooperation between all stakeholders who need to be involved in security efforts.

Please note that this structure is not intended for any specific type of application, underlying technology, or development stage. Rather, it should be applicable to various models/algorithms, architectures, and use scenarios throughout their lifecycles.

Use Context is the key

The USE CONTEXT should include all elements relevant to a decision or sequence of decisions that are automated or augmented with help from an AI system. The specific elements vary between scenarios: the USE CONTEXT of a system that generates a logo differs significantly from that of a system expected to drive a car or one that should assist a physician in a medical diagnosis.

Some elements, however, should be applicable to most cases:

Scope and goals – including non-goals, severity and complexity of decisions/tasks, boundaries of intended use (vs. risk of misuse/abuse), expectations, and assumptions about the results from AI.

Domain and environment – situational requirements (e.g., time constraints), dependencies and integrations (inputs/outputs), domain-specific rules and regulations (e.g., copyrights).

Users and experiences – different roles, users vs. subjects (as impacted, not always intentionally), clear accountabilities and responsibilities, UX patterns, and changing nature of interactions with AI.

Impact and consequences – in terms of value and harm, including unintended ones, for individuals or groups, but also in a broader social, economic, or political scope (e.g., the well-researched problems of bias and discrimination).
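One way to make these four groups of elements tangible is to record them in a simple structure that can be reviewed before any work on the MODEL or PLATFORM layers begins. The sketch below is only an illustration: the class name `UseContext`, its field names, and the sample values are assumptions made for this example, not part of any established framework.

```python
from dataclasses import dataclass, field


@dataclass
class UseContext:
    """Hypothetical record of the four USE CONTEXT element groups."""

    # Scope and goals
    goals: list[str] = field(default_factory=list)
    non_goals: list[str] = field(default_factory=list)
    # Domain and environment
    regulations: list[str] = field(default_factory=list)
    integrations: list[str] = field(default_factory=list)
    # Users and experiences
    user_roles: list[str] = field(default_factory=list)
    impacted_subjects: list[str] = field(default_factory=list)
    # Impact and consequences
    potential_harms: list[str] = field(default_factory=list)

    def missing_groups(self) -> list[str]:
        """Return the element groups that still have an empty field."""
        groups = {
            "scope and goals": [self.goals, self.non_goals],
            "domain and environment": [self.regulations, self.integrations],
            "users and experiences": [self.user_roles, self.impacted_subjects],
            "impact and consequences": [self.potential_harms],
        }
        return [name for name, fields in groups.items() if not all(fields)]


# Illustrative (made-up) entry for a diagnosis-support scenario.
diagnosis_support = UseContext(
    goals=["assist a physician in a medical diagnosis"],
    non_goals=["replace the physician's decision"],
    user_roles=["physician"],
    impacted_subjects=["patient"],
)
print(diagnosis_support.missing_groups())
```

A non-empty result is a signal that the security discussion has not yet covered the whole USE CONTEXT; it says nothing about the quality of the entries that are present.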

Even though most controls and security processes might be implemented at the MODEL and PLATFORM layers, the discussion about the security of AI applications needs to start with the USE CONTEXT. Without that, we will not be able to determine the scope of required security efforts, and we will not know about gaps that should be addressed or even if implementing a specific AI application is acceptable (due to technology or other reasons).

Model is new, shiny, and unknown

From a security point of view, AI models are a different type of software, with new attack surfaces and new techniques that can be used to attack them. Traditional security mitigations, like input validation, still need to be implemented, but they will not be effective against attacks that use valid but adversarial input to disrupt decision processes based on results from AI. For most applications, it comes down to questions about trust: how much do we trust the input (e.g., for training), how much do we trust the process (to be robust and resilient), and how much do we trust the results (are hallucinations acceptable)?
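A toy example can show why input validation alone does not stop valid but adversarial input. The sketch below is not taken from any real system: the linear scoring model, its weights, the [0, 1] feature range, and all function names are assumptions made for illustration, and the attacker is assumed to know the weights. The perturbation moves each feature a small step against the sign of its weight, similar in spirit to gradient-sign evasion attacks.

```python
# Hypothetical linear model: score > 0 means "accept".
WEIGHTS = [0.9, -0.6, 0.4]
BIAS = -0.5


def is_valid(features):
    """Traditional input validation: expected length, every value in [0, 1]."""
    return len(features) == len(WEIGHTS) and all(0.0 <= v <= 1.0 for v in features)


def score(features):
    """Weighted sum of the features plus the bias."""
    return sum(w * v for w, v in zip(WEIGHTS, features)) + BIAS


def decide(features):
    """Validate the input, then turn the score into a decision."""
    if not is_valid(features):
        raise ValueError("input rejected by validation")
    return "accept" if score(features) > 0 else "reject"


def perturb(features, eps):
    """Shift each feature by eps against the sign of its weight.

    This lowers the score of a linear model; values are clipped to [0, 1]
    so the result still passes validation.
    """
    return [
        min(1.0, max(0.0, v - eps * (1 if w > 0 else -1)))
        for w, v in zip(WEIGHTS, features)
    ]


original = [0.6, 0.2, 0.5]
adversarial = perturb(original, eps=0.1)

print(decide(original))       # accept
print(is_valid(adversarial))  # True
print(decide(adversarial))    # reject
```

Both inputs pass the same validation, yet the decision flips. Real models and real attacks are far more complex, but the gap between "valid" and "trustworthy" input is the same.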

The practical attacks against the AI models can be mapped to key security properties:

Confidentiality: privacy attacks aimed at compromising the model (model extraction) or the data used during training or tuning (model inversion, membership inference).

Integrity: attacks aimed at influencing the results of a model, with data poisoning or backdooring during the training stage or evasion attempts during inference.

Availability: attacks aimed at degrading the quality of results or the system's response time, with severe consequences in high-risk domains or mission-critical scenarios.
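The mapping above can be kept as a small lookup table, for example as a checklist during threat modeling. This is a minimal sketch: the attack names come from the list above, while the enum, the dictionary, and the helper function are hypothetical names chosen for this example.

```python
from enum import Enum


class SecurityProperty(Enum):
    CONFIDENTIALITY = "confidentiality"
    INTEGRITY = "integrity"
    AVAILABILITY = "availability"


# Attack categories named in the text, keyed to the property they target.
ATTACKS = {
    "model extraction": SecurityProperty.CONFIDENTIALITY,
    "model inversion": SecurityProperty.CONFIDENTIALITY,
    "membership inference": SecurityProperty.CONFIDENTIALITY,
    "data poisoning": SecurityProperty.INTEGRITY,
    "backdooring": SecurityProperty.INTEGRITY,
    "evasion": SecurityProperty.INTEGRITY,
    "degraded result quality": SecurityProperty.AVAILABILITY,
    "degraded response time": SecurityProperty.AVAILABILITY,
}


def attacks_against(prop):
    """Return the listed attacks that target the given property, sorted by name."""
    return sorted(name for name, target in ATTACKS.items() if target is prop)


print(attacks_against(SecurityProperty.INTEGRITY))
# ['backdooring', 'data poisoning', 'evasion']
```

The table is intentionally incomplete; it only restates the categories mentioned here and should be extended as new techniques appear.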

A robust system is expected to function as intended under adverse conditions. What is intended depends on the context of the model and its intended usage. The same model can be suitable for some applications and completely unacceptable for others, and we need to know which is the case.

Platform seems familiar (mostly)

AI applications require significant computing resources that must be secured regardless of whether we use cloud, on-prem, hybrid or edge configurations. In addition, the PLATFORM needs to meet specific requirements related to USE CONTEXT, the MODEL, and related technologies.

The challenges span several dimensions:

Infrastructure – multiple trust relationships (data, results, services), AI models as critical components, and different integration patterns with external solutions.

Software – strong dependencies on frequently updated open-source software, problems with supply chain attacks, including new challenges related to pre-trained models.

Data – operating on a significant amount of data, often sensitive, requires effective data governance and protection, especially with external integrations.

Users – multiple roles requiring different levels of access to data and systems, need for UX that secures and enables shared research and experimentation with new models.

Many requirements identified at the USE CONTEXT and MODEL layers need to be implemented at the PLATFORM layer, including integrated and comprehensive monitoring and incident response, as this is the best place to detect all types of problems. This layer may seem the most familiar and, therefore, less important. Still, it should be clear that AI applications cannot be protected if there are gaps or vulnerabilities in the underlying technologies, because these are the system elements that will still be attacked first. We cannot forget that we can build trustworthy AI applications only on solid foundations.
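The idea that requirements identified at one layer are implemented at another can be sketched as a simple traceability check. Everything in the example below is an assumption made for illustration: the `Requirement` class, the `find_gaps` function, and the sample requirements and controls are hypothetical and do not describe a real toolset.

```python
from dataclasses import dataclass

LAYERS = ("USE CONTEXT", "MODEL", "PLATFORM")


@dataclass(frozen=True)
class Requirement:
    name: str
    identified_at: str   # layer where the need was discovered
    implemented_at: str  # layer where the control has to live


def find_gaps(requirements, implemented_controls):
    """Group requirements without an implemented control by implementing layer."""
    gaps = {layer: [] for layer in LAYERS}
    for req in requirements:
        if req.identified_at not in gaps or req.implemented_at not in gaps:
            raise ValueError(f"unknown layer in requirement: {req.name}")
        if req.name not in implemented_controls:
            gaps[req.implemented_at].append(req.name)
    return gaps


# Made-up sample data for illustration only.
requirements = [
    Requirement("detect degraded response time", "MODEL", "PLATFORM"),
    Requirement("restrict access to sensitive training data", "USE CONTEXT", "PLATFORM"),
    Requirement("resist evasion attempts", "MODEL", "MODEL"),
]
implemented_controls = {"restrict access to sensitive training data"}

for layer, missing in find_gaps(requirements, implemented_controls).items():
    print(layer, missing)
```

Grouping by the implementing layer shows each team what it still owes, while the `identified_at` field keeps the link back to the layer, and the stakeholders, that raised the requirement.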

The presented layered approach should make the tasks of securing AI applications not just doable but manageable. This structure can help us build a complete picture of the system that connects the use of AI applications, specific AI models or algorithms, and underlying technology platforms. That picture can serve as the system model for threat modeling of the whole system, after which we can dive into specific layers to determine the controls that should be implemented, as well as the gaps for which mitigations are yet to be invented. We should start by analyzing the USE CONTEXT of AI applications to identify requirements for all three layers. Evaluating these requirements against implemented or available controls and processes should lead to a better understanding of limitations, constraints, and guidelines, including clarity on whether using a solution in a particular context is acceptable. In the end, we should have a better understanding of the benefits of a new application but also of the related risks, including those that still require our attention.

We'll discuss this in the next post.

Updated on May 3rd, 2023, with minor fixes based on received feedback (thank you!)