The security of AI applications depends on efforts by multiple teams, executed with different goals, usually in unrelated contexts. These efforts need to be closely coordinated and integrated to ensure that an AI application can be considered secure and safe. This post discusses how the layered approach can help with these tasks.

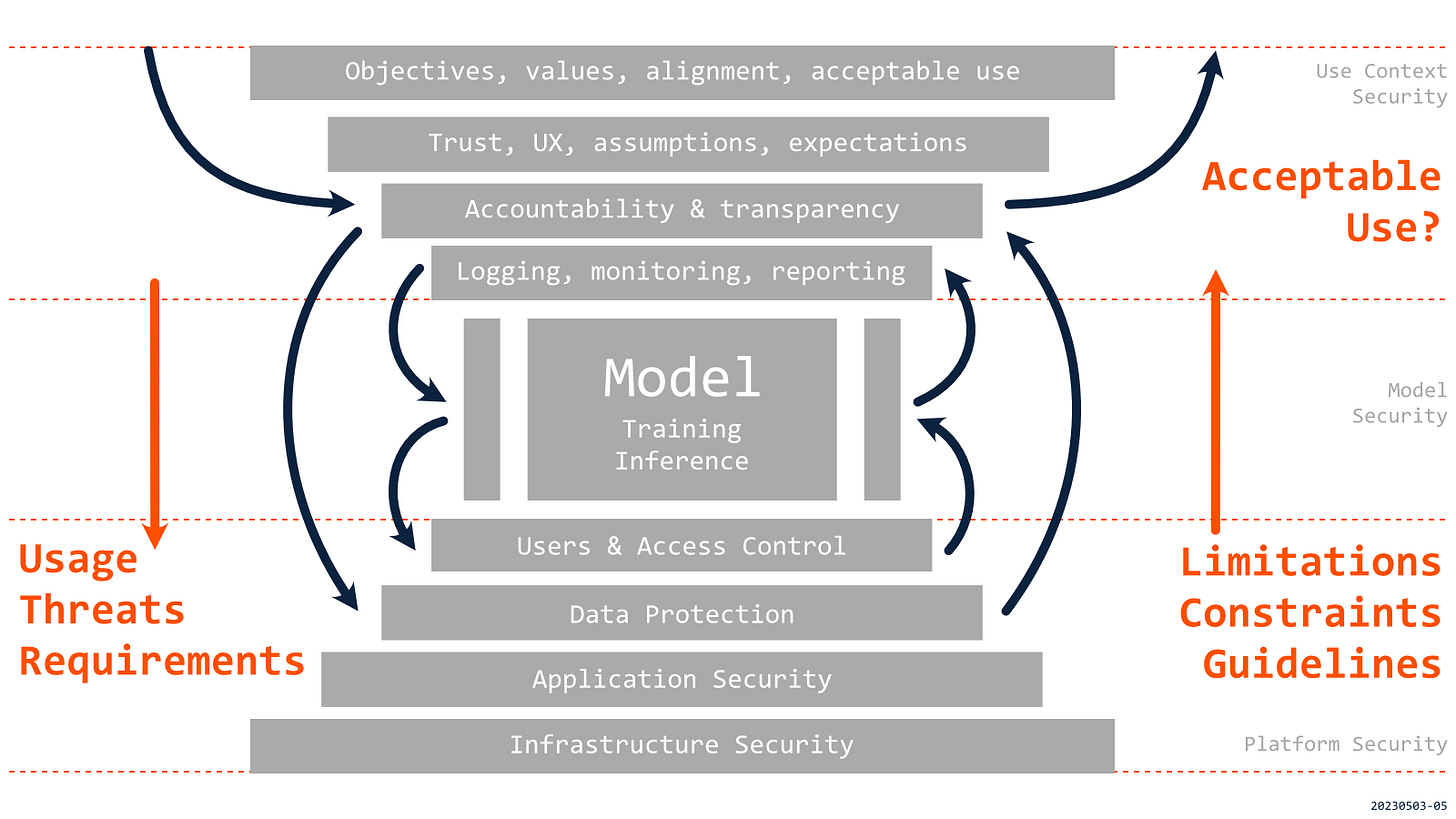

This post is a continuation of the previous one, which introduced an approach to the security of AI applications as complex systems with distinct USE CONTEXT, MODEL, and PLATFORM layers. That layered approach can provide a structure for better understanding the characteristics of, and relationships between, different parts of the system. However, this structure is only the first step, as we need to ensure that efforts focused on various elements are appropriately coordinated and integrated. The development of AI applications involves multiple stakeholders, including business users, data scientists, and platform engineers. Different teams operate with their own goals and priorities, often with limited or informal communication. The layered approach to AI security can help to facilitate these interactions and to clarify responsibilities and accountabilities at different layers. In practical scenarios, such close and complete cooperation is required to understand all requirements, limitations, and constraints and to determine whether using an AI application in a particular context is acceptable.

The high-level process of using the layered structure for practical analysis and implementation of security controls may be divided into three stages:

1. Start with an analysis of USE CONTEXT, as most requirements for an AI application are determined at this layer. We need to understand the intended use, possible impacts, and threats that the AI application will likely face in order to determine practical requirements and controls for the whole system.

2. Evaluate security controls and processes at all layers (including USE CONTEXT) against the complete set of requirements. The analysis should cover controls already in place and also those that could be implemented or should be researched to mitigate new threats or identified gaps.

3. Define, document, and communicate constraints, restrictions, and guidelines for using the AI application in a specific context. The documentation should include inherent and residual risks and be used to drive the adoption of the solution, making clear whether a particular application is acceptable based on technical and non-technical criteria.

Top-down analysis of REQUIREMENTS

Most technical requirements for AI applications will depend on their USE CONTEXT, and this is where security analysis needs to start. The same AI model can be suitable for some applications but unacceptable for other scenarios. We need to understand what is at stake, the boundaries of intended and unintended use (how are they enforced?), who the users and subjects are, and how they can be impacted by results from AI (how should we measure that?).

This stage's primary goal is to identify all requirements that need to be met for the responsible use of an AI solution in a specific context and to define the conditions under which we would consider a solution trustworthy. Those requirements can be related to the PLATFORM and proper access control to sensitive data, to the properties of the MODEL and monitoring of input, output, and usage, or to the USE CONTEXT and appropriate design of user experience or service agreement. The requirements must be meticulously documented (especially the challenging ones!) and updated when there are changes in USE CONTEXT. That documentation, when combined with the results of evaluations of controls at other layers, should be the foundation for deciding whether the risk of using an AI application in a specific context is acceptable.
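As a rough illustration of what such documentation could look like in practice, the sketch below records requirements in a small register tagged by layer. It is only one possible shape: the `Requirement` fields, the `R1`-style identifiers, and the example entries are hypothetical and chosen for illustration, not taken from any standard or tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Layer(Enum):
    USE_CONTEXT = "USE CONTEXT"
    MODEL = "MODEL"
    PLATFORM = "PLATFORM"


@dataclass(frozen=True)
class Requirement:
    req_id: str                # hypothetical identifier scheme
    layer: Layer               # layer expected to satisfy the requirement
    description: str
    challenging: bool = False  # flag requirements that are hard to meet


def group_by_layer(requirements):
    """Return a dict mapping each Layer to the requirements assigned to it."""
    grouped = defaultdict(list)
    for req in requirements:
        grouped[req.layer].append(req)
    return dict(grouped)


# Illustrative entries only, loosely based on the examples in the text.
requirements = [
    Requirement("R1", Layer.PLATFORM, "Restrict access to sensitive data"),
    Requirement("R2", Layer.MODEL, "Monitor input, output, and usage"),
    Requirement("R3", Layer.USE_CONTEXT,
                "Design the user experience for human review", challenging=True),
]

by_layer = group_by_layer(requirements)
```

Keeping the layer as an explicit field makes it easier to hand each team the subset of requirements it is accountable for, while the register as a whole stays tied to one USE CONTEXT.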

Evaluation of CONTROLS at each Layer

In the second stage, high-level requirements from the analysis of USE CONTEXT are translated into technical requirements for building, operating, and using a model. In this stage, we look at controls, and the guardrails implementing them, to evaluate whether they meet all the requirements. During that process, we don’t only look at controls that are already in place but also check those that are available and could be added to the system.

Given the variety and novelty of AI applications, we will likely identify threats, requirements, or even scenarios for which existing security controls are missing or insufficient (enterprise uses of LLMs are a timely example). Such gaps point to opportunities for research and development, but they also identify current limitations on using an AI application in a given context for which technologies may not yet be ready. Those limitations can be crucial, especially in high-risk domains or mission-critical scenarios such as healthcare, where we may have specific requirements around the explainability of a model and the interpretability of results. Again, it is of utmost importance that all such findings are documented so they cannot be missed or ignored when deciding to use an AI application in a specific context.
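The evaluation described above can be sketched as a simple gap analysis. This is a minimal sketch under strong assumptions: coverage is treated as binary (a control either addresses a requirement or it does not), and the `Control` type, the status names, and the `met` / `addressable` / `gap` labels are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    IMPLEMENTED = "implemented"  # control is already in place
    AVAILABLE = "available"      # control exists and could be added


@dataclass(frozen=True)
class Control:
    name: str
    status: Status
    covers: frozenset  # IDs of the requirements this control addresses


def evaluate(requirement_ids, controls):
    """Classify each requirement as 'met', 'addressable', or 'gap'."""
    result = {}
    for req_id in requirement_ids:
        statuses = {c.status for c in controls if req_id in c.covers}
        if Status.IMPLEMENTED in statuses:
            result[req_id] = "met"
        elif Status.AVAILABLE in statuses:
            result[req_id] = "addressable"
        else:
            # No known control: a candidate for research, or a limitation
            # on using the application in this context.
            result[req_id] = "gap"
    return result


# Illustrative data only.
controls = [
    Control("role-based access control", Status.IMPLEMENTED, frozenset({"R1"})),
    Control("output monitoring", Status.AVAILABLE, frozenset({"R2"})),
]

findings = evaluate(["R1", "R2", "R3"], controls)
```

In a real assessment, coverage is rarely all-or-nothing, so a partial-coverage score or a reviewer's note per finding would probably be needed; the point here is only that every requirement ends up with an explicit, recorded status.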

LIMITATIONS, CONSTRAINTS and GUIDELINES

Based on requirements and confirmed limitations in implemented or available controls, we can better understand the risks related to the analyzed AI application. This allows us to define constraints that would reduce risks, for example, by prohibiting the use of a solution for high-risk decision-making. Eventually, those constraints can become restrictions related to domain-specific regulation or a certification process. Just as significant are user-friendly and practical guidelines that are clearly communicated to all potential users of an AI application. For example, the guidelines could identify the need for direct human oversight or for validation and approval of results. Both limitations and guidelines should be acknowledged, accepted, and recorded. Unfortunately, relying just on user agreements will likely be insufficient, for example, in the case of intentionally malicious use of AI.
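One way to make "acknowledged, accepted, and recorded" concrete is to keep a small record per application and context and track who has signed off on it. The sketch below is hypothetical throughout: the `UsageRecord` type, its fields, and the example application are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class UsageRecord:
    application: str
    use_context: str
    constraints: list  # e.g. prohibited uses
    guidelines: list   # e.g. required human oversight
    acknowledged_by: set = field(default_factory=set)

    def acknowledge(self, stakeholder):
        """Record that a stakeholder group has accepted the record."""
        self.acknowledged_by.add(stakeholder)

    def pending(self, stakeholders):
        """Return, in sorted order, stakeholders who have not acknowledged."""
        return sorted(set(stakeholders) - self.acknowledged_by)


# Illustrative record only.
record = UsageRecord(
    application="triage-assistant",
    use_context="internal support ticket routing",
    constraints=["No use for high-risk decision-making"],
    guidelines=["A human approves results before any action is taken"],
)
record.acknowledge("business users")

missing = record.pending(
    ["business users", "data scientists", "platform engineers"]
)
```

A record like this does not prevent intentionally malicious use, but it does make visible which teams have not yet accepted the constraints before an application is adopted.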

The applications where AI technologies bring the brightest promises are often also associated with the most significant risks and unknowns. For most non-trivial AI applications with potentially game-changing impacts (again, depending on USE CONTEXT), we eventually need to decide whether the risks related to using the model and platform in a specific context are acceptable or whether more research and development are necessary before we can move forward. Combined learnings from the analysis of threats, use requirements, implemented and available controls, limitations, constraints, regulations, and guidelines should make such decisions more informed and responsible. That said, there are scenarios where we should have all the required information to make a call about a technology’s readiness already at the USE CONTEXT layer, even without looking at the details of MODEL or PLATFORM (e.g., attempts to quickly add LLMs to healthcare).

The layered approach, supported by well-designed processes, could be helpful for the vertical integration of security efforts executed by different teams and stakeholders involved in bringing AI capabilities to real-life applications. Many scenarios where we hope AI will deliver significant value are associated with risks that are not yet well understood and, therefore, cannot be mitigated unless we’re lucky (not a great strategy for security). We need to understand what requirements are defined by our usage scenarios, the limitations of current technologies, and the constraints and guidelines for secure and safe implementation. That requires effective communication, clear accountabilities, transparency, and up-to-date documentation of all identified vulnerabilities, gaps, or concerns so they are not lost in translation. Without that, we cannot make an informed call on whether an AI application is ready to automate or augment the specific decisions that we’d hope technology will help us with.

Updated on May 4th, 2023, with changes to opening and closing sections.