Generative AI, Security and Trust

Do we have a good enough understanding of benefits, threats, and requirements?

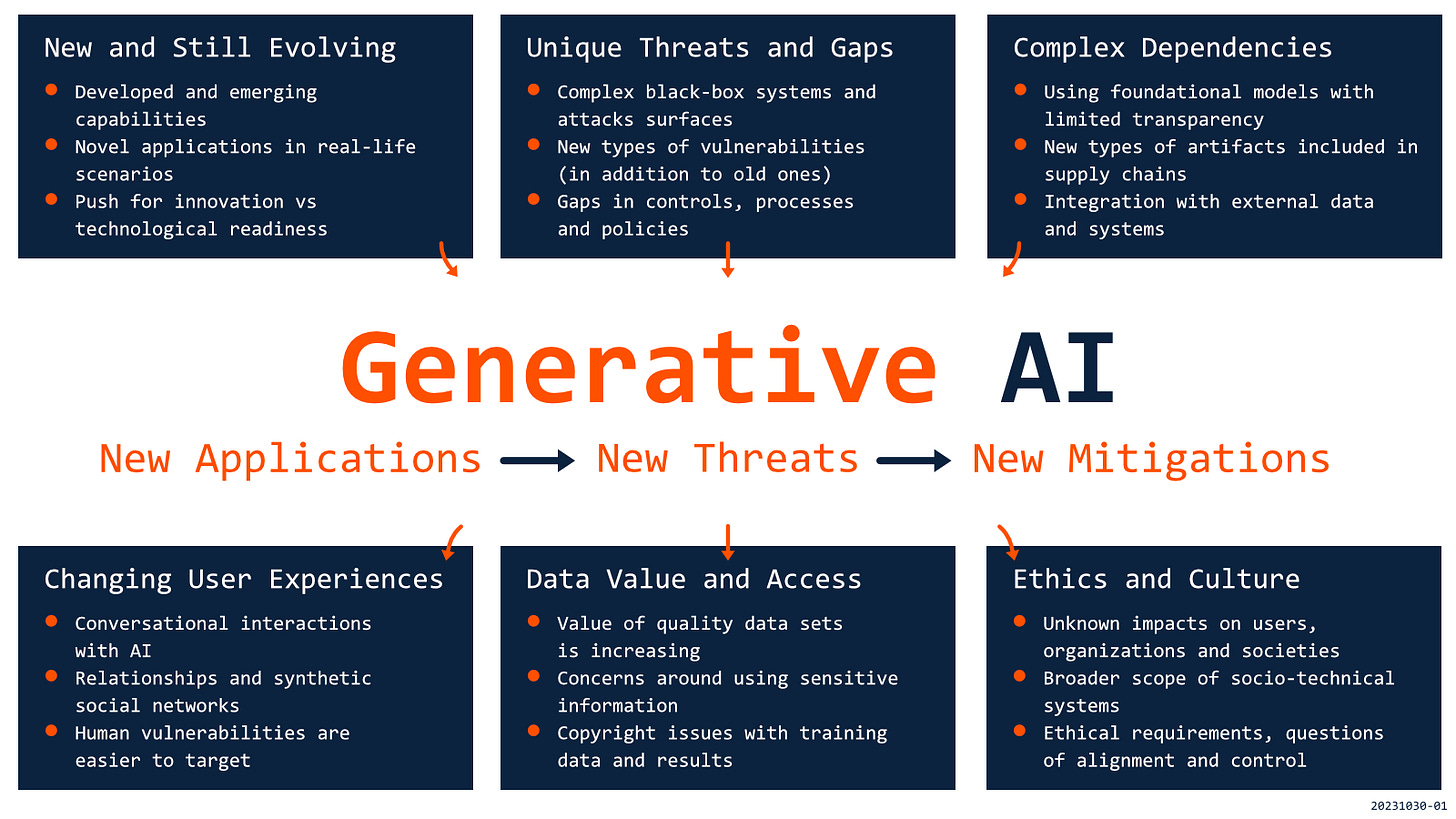

There are high hopes for applications of Generative AI, even though we don’t yet fully recognize the benefits or threats that must be mitigated. Generative AI is different even from other types of AI. These applications are still very new, with unsolved problems, complex technical and business dependencies, and likely to change human-computer interaction and redefine value of data. We need to pay close attention to which scenarios these solutions are ready to be used and what could be their broader impact on our digital reality.

The recent understanding of AI has been shaped by Generative AI and capabilities to create content that, until recently, was unique for humans. Generative models effectively produce text, code, images, videos, 3D models, or music. Large Language Models (like GPT-4, PaLM, or Claude) can summarize, translate, explain, and transform text or get into long conversations with users. Image generators (e.g., Stable Diffusion, Midjourney, or DALL·E) can produce photorealistic or artistic-like results based on descriptive input. Generative AI is finding practical applications in various domains and scenarios. On one side, there are concerns and resistance, but in the background, AI is still making its way inside. The AI Race is happening with unprecedented progress and high expectations but also with limited transparency, confusing dependencies, and unclear accountabilities. We don’t yet fully understand the value, risks, and possible impacts of Generative AI. In organizational contexts, there are reasonable questions about whether applications of Generative AI can be trusted. In the bigger picture, we also need to consider how capabilities of delivering results that can be indistinguishable from human output will more broadly affect trust in our digital reality.

Generative AI capabilities cover a broader space of cognitive, creative, or communication tasks, and their results will become inputs to our decision processes.

The promises and hopes for Generative AI applications are to fundamentally change how we work with information and interact with software. These technologies are expected to reduce costs, improve productivity, and automate mundane tasks - they are even described as the next productivity frontier. Even though there are great examples of successful use cases for Generative AI, companies struggle to deploy these solutions. Regardless of success in particular scenarios, these applications will inevitably change how we make decisions in individual, organizational, or global contexts. Automation and augmentation of decision-making have been mostly associated with Discriminative AI and data-focused tasks like prediction or classification (they are still the most common in enterprise contexts). New generative capabilities cover a broader space of cognitive, creative, or communication tasks, and their results will become inputs to our decision processes. We can make a different decision if we use a summary produced by AI instead of reading a paper; similarly, an interaction with a customer may have a different outcome if we delegate it to an artificial agent. The risks depend on a particular use context and detailed integration of AI with our information systems and decision workflows, but we should start with the recognition that these solutions differ from traditional software and other types of AI.

Generative AI is new and a work in progress. The capabilities are still evolving, and some are surprising and not necessarily well understood. There is a significant push for finding new applications for these capabilities, which means they can also be abused or misused in innovative ways. We don’t fully understand the threats landscape or technical limitations for Generative AI, but we already know about gaps in our controls and processes. Solutions for identified problems are developed, but they will take some time to mature, and some solutions will not be easy to find. The same applies to regulations under development, missing standards, metrics, or best practices related to effective usage policies, penetration testing, or independent certification. We are dealing with a moving target, where not only technology is changing, but also users’ behaviors and expectations, which might solve some problems and create brand new ones.

There are new threats with missing mitigations. AI models are different from regular software, and Generative models are different from other types of AI. They are more complex, black-box, and often developed with limited transparency due to technical or business reasons. There are new threats related to AI technologies, and some are specific to Generative AI (PDF), like prompt injections in the case of LLMs. These solutions, by design, accept and produce complex unstructured data, and increasing the number of supported data types will also affect attack surfaces and introduce new types of vulnerabilities. When ChatGPT started to accept images as part of conversations, that established a whole new set of vectors for prompt injection attacks. All classical security requirements related to Confidentiality, Integrity, and Availability still apply to Generative AI. They can be even more difficult to meet because of the complexity and novelty of these applications.

Technical and business dependencies are complex and unclear. Generative AI solutions are rarely built from scratch, partially due to the costs and difficulty of such efforts but also because plenty of foundation models (pre-trained) can be used or customized (i.e., fine-tuned). Unfortunately, most state-of-the-art models do not share much information about data sources, limitations, or evaluation and testing (as covered in requirements of the Draft EU AI Act). That creates business dependencies with unknown variables, especially in more advanced configurations, when a third party develops a specialized solution based on foundation models. On the technical level, supply chains have become more difficult as they need to also include data or models. The complexity increases even more when AI solution is integrated with external data sources (e.g., leading to indirect prompt injection) or when these solutions are deployed to operate in physical space.

The nature of human-computer interactions is fundamentally changing. One of the most interesting consequences of LLMs is moving from discreet interactions with software to more continuous conversations. That will change how we perform many tasks (e.g., searching for information) and go further as interfaces become multimodal – for example, by using voice interactions with ChatGPT. The relationships will get even more complicated as artificial agents will be equipped with personalities (e.g., celebrity-based from Meta), and more emotional components will be added. We should be concerned about that trend heading towards (even partially) synthetic social networks, especially when developed without transparency and regulations. From a security POV, such rich interactions may lead to new types of attacks aimed at humans, often the weakest links of security systems. Harm to vulnerable individuals may also result from irresponsible use of technology (safety issues that could become security).

Generative AI will redefine value and access to data. Data is essential for training and improving models, and continuous access is needed for many scenarios. Quality data sets suddenly become much more valuable. With Generative AI, legitimate concerns regarding inputs (e.g., data leaking or collecting) and outputs (e.g., quality of results) lead some companies and agencies to ban or restrict the use of Generative AI. Many promising applications need access to sensitive data (e.g., emails or internal documents) to perform their actions. These implementations require a proper reviewing process, data governance maturity at the organizational level, and clarity about the business value of data. Practical applications of Generative AI are also limited by open questions about copyrights, both for data used in training models and data produced by or with help from AI. This problem is unlikely to be resolved by providers offering legal protection for AI copyright infringement challenges.

It’s not only technology but also ethics, culture, and communication. The impact of Generative AI goes beyond technology, and security efforts cannot be limited to engineering solutions. Specific consequences depend on integration use context or quality and usage of results, and users can be affected even if they don’t choose to use AI (e.g., by being manipulated with help from AI). We need to extend security efforts, like threat modeling, to bigger socio-technical systems to capture all the assets that could be targeted and build appropriate guardrails emphasizing transparency and accountability. On top of that, we need to address additional challenges related to AI safety (no adversaries), ethical considerations (e.g., fairness and dealing with bias), or value alignment (not only technical but also concerning control over models). Even though these elements may not seem directly related to AI security, they may result in AI systems being vulnerable and new ways of causing harm.

When it's easy and cheap to produce a mass amount of human-like content, we cannot expect its value and utility to be perceived in the same way.

With Generative AI, we still operate in uncharted territories regarding benefits, and risks. In one significant aspect, Generative AI differs from other types of AI solutions focused on predictive analytics, classification, or pattern recognition. These new solutions can impact global trust in digital space and change not only how we trust technology but also how we trust each other. The quality of AI-generated content will challenge what we see and believe, how we trust individuals, organizations, or political systems, and how we share and value the results of human work. Generative AI impact may be especially significant when combined with social media - if social media made it cheaper and easier to spread disinformation, now generative AI will make it easier to produce. Also, to be clear, our challenges are related to malicious and non-malicious usage of Generative AI. It's not only about the practical use of deep fakes but also about massive information overload of artificially generated content that seems legitimate. We will have to learn how to deal with it. When it's easy and cheap to produce a mass amount of human-like content, we cannot expect its value and utility to be perceived in the same way. If a long email is generated by AI based on a single sentence, the recipients will quickly start to use solutions to summarize these emails and extract the original message (not necessarily increasing productivity along the way). In such environments, it will be easier to attack individuals and take advantage of personal vulnerabilities, also with the help of automated social engineering.

New technologies are rarely dangerous on their own – most harm results from how we decide to use them. With all the excitement around Generative AI, there might be a perceived necessity to adopt new solutions quickly and boldly, and being the first to implement new AI solutions would lead to a significant competitive advantage. Combined with challenges in making informed decisions about values and risks, that can easily result in focusing on benefits while disregarding unsolved problems, taking shortcuts, and creating applications without a complete understanding of their impact. That might work in many cases, but such a strategy has inherent risks. We will see successful use cases as well as spectacular failures with applications of Generative AI. Critical mistakes could be rare, but their costs for organizations might be higher than actual benefits (especially when they are still unknown). AI Race is undoubtedly happening but finishing it might be more important than being first. When it comes to long-term applications, Generative AI may be one of these cases, when slowly is the fastest way to get to where you want to be.

Updated on Nov 6th, 2023, based on received feedback. Discussion about “accountability and shared responsibility” was moved to the next post, and a diagram was added.

I don't think we do and I don't think we can predict the future. How a few use the capability will shape and model the future.