Security of AI Use Context

We need to start by understanding the ways we use AI

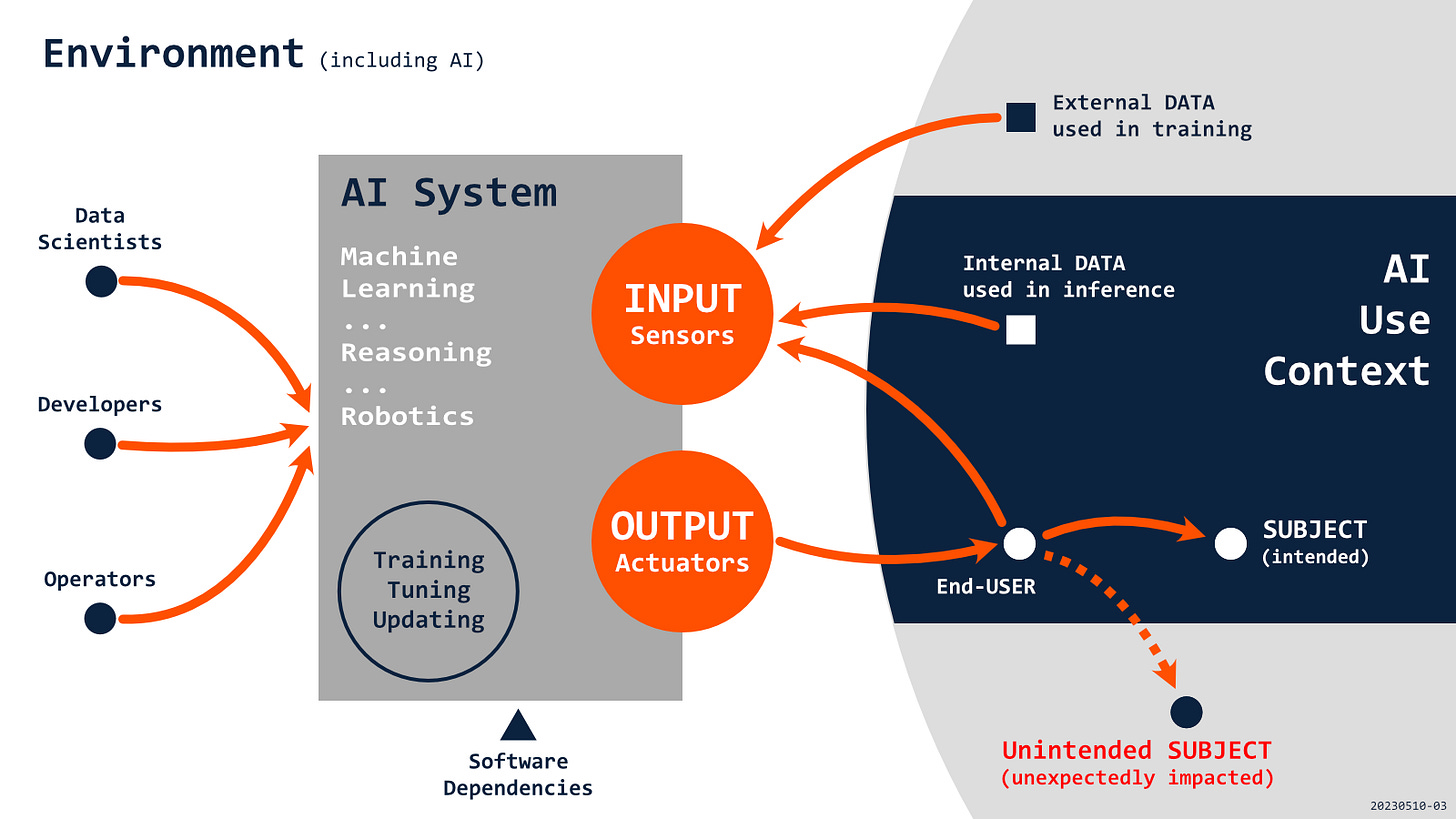

Security of an AI application should start with understanding its intended and actual use, the scope of impact, and the possible consequences of failures. Threats and requirements for AI applications depend critically on how we use these technologies. In this post, we will take a closer look at USE CONTEXT within the big picture of AI Security.

In earlier posts, we discussed a 3-layered approach to the security of AI applications, with USE CONTEXT identified as the top layer in addition to MODEL and PLATFORM. We also looked at integrating efforts across these layers to determine requirements, evaluate controls, understand constraints, and decide whether using an AI application is acceptable. USE CONTEXT plays a unique role in these processes, as our analysis in that scope determines many requirements for the whole system (including all underlying technologies). At this layer, we also have to decide whether the risks of using an AI application in a specific context are acceptable based on technical and non-technical criteria. This layer effectively connects the vision for an application and its related technologies with the reality in which the solution will be implemented and its consequences felt. The security and safety of any AI application essentially start with an understanding of its USE CONTEXT.

AI as a Socio-technical System

AI USE CONTEXT includes all elements, actors, and aspects of the environment that are relevant to, or can be affected by, the decisions or tasks that we try to automate or augment with help from AI. Given the variety of new applications and growing technical capabilities, defining a context for all emerging scenarios can be tricky (since it can be, well, contextual). We are dealing with complex systems that we don’t fully understand. USE CONTEXTs differ for systems that generate a logo, drive a car, or assist a physician with a medical diagnosis. Even within the same application, we might talk about multiple contexts depending on the point of view. Different elements will be relevant for an individual who is enabled by AI capabilities, a startup trying to build a business by automating specific tasks, and employees whose jobs get automated.

That said, with AI applications, we still have a responsibility to identify the core elements and aspects of USE CONTEXT that can relate to all possible negative effects of the technology, assuming worst-case scenarios. We need to determine what can go wrong, under what circumstances harm could be caused, and who might be affected. When an AI application is used in an environment (and is usually also a part of it), its impact can be broad and often unexpected. In all non-trivial scenarios, we should look at AI applications as socio-technical systems that include technology, environment, and users but can also have unintended impacts at the individual, business, social, or global level.

Even though USE CONTEXTs for AI applications can be very different, there are shared elements and aspects that should apply to most applications and help us determine boundaries of intended use and risks of misuse or abuse.

Decisions & Tasks: We need to start by understanding the details of the decisions and tasks made or supported by AI applications, including indirectly (e.g., by a downstream decision maker who never interacts with AI). That includes specifying how an AI application will be used in our decision workflows, with clear goals and non-goals separating intended from unintended use. We need to understand the severity and complexity of decisions and tasks (what is at stake?) as well as expectations and assumptions about the results delivered by AI (will they be trusted or validated?). Clarity in this scope is essential for defining requirements but also for more specific efforts focused on values/objectives alignment. We need to be able to monitor and detect differences between the intended and actual uses and behaviors of AI systems, as these may change over time or as the underlying model evolves.
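One way to make goals and non-goals actionable is to record them explicitly and compare them against the uses actually observed. The sketch below is a minimal illustration under stated assumptions: `UseContext` and `review_observed_uses` are hypothetical names, uses are represented as plain strings, and real monitoring would need much richer signals than exact string matches.

```python
from dataclasses import dataclass, field


@dataclass
class UseContext:
    """Hypothetical record of the documented USE CONTEXT for one AI application."""
    application: str
    goals: set[str] = field(default_factory=set)      # intended, approved uses
    non_goals: set[str] = field(default_factory=set)  # explicitly excluded uses


def review_observed_uses(ctx: UseContext, observed: list[str]) -> dict[str, list[str]]:
    """Sort observed uses into approved, prohibited, and unreviewed buckets.

    Assumption: each observed use has already been labeled with a string
    that can be matched exactly against the documented goals and non-goals.
    """
    report: dict[str, list[str]] = {"approved": [], "prohibited": [], "unreviewed": []}
    for use in observed:
        if use in ctx.non_goals:
            # Explicit non-goals take precedence if a use appears in both sets.
            report["prohibited"].append(use)
        elif use in ctx.goals:
            report["approved"].append(use)
        else:
            # Neither a goal nor a non-goal: actual use has drifted beyond
            # the documented context and needs a fresh review.
            report["unreviewed"].append(use)
    return report


if __name__ == "__main__":
    ctx = UseContext(
        application="triage-assistant",  # hypothetical example application
        goals={"summarize patient notes"},
        non_goals={"final diagnosis"},
    )
    print(review_observed_uses(
        ctx, ["summarize patient notes", "final diagnosis", "draft insurance appeal"]
    ))
```

The "unreviewed" bucket is the interesting one: it captures uses nobody anticipated, which is exactly where the gap between intended and actual use tends to appear.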

Domain & Environment: Our goals must be considered in the context of the domains and environments where AI applications are expected to operate. Application domains are associated with specific best practices, rules, and regulations; some may be identified as high-risk by default or include scenarios that require special attention (e.g., healthcare or transportation). Environments, digital and physical, also have their unique characteristics, with situational requirements for decision-making (e.g., time constraints or stressful conditions) and mission-critical scenarios. We must understand how an AI system fits the environment and integrates with individual, business, or global decision workflows. That brings us closer to technical requirements as we look at input/output data (sensitive or proprietary?), deployment patterns, dependencies, trust, and assumptions related to external models, data, or services.

Users & Subjects: Multiple actors are usually involved with AI applications, from the data scientists and developers who build them and the engineers who operate them to the actual end users of the system. We need clear accountabilities and responsibilities, as many of these stakeholders are involved only at specific stages and may have limited visibility into the complete lifecycle of the system. In addition to the users of AI systems, we also have subjects: all individuals and groups affected by results from AI applications (e.g., recipients of benefits granted or rejected by AI). In some cases, subjects may not be obvious, as they are impacted indirectly (e.g., artists and generative AI), or potentially everybody could be included just by being part of the environment (e.g., public use of facial recognition). These considerations become especially important given the changing nature of our interactions with AI, as we move from queries and discrete decisions to conversations and continuous cooperation with AI, which could create dependencies not only at the cognitive but also at the emotional level.

Impact and Consequences: AI applications can benefit or harm users and subjects but can also have broader social, economic, or political consequences. Negative impacts can be caused by malicious use of AI, but they can also result from ignorance, negligence, or failing to look beyond the narrow design of decision/task scenarios during development. Given the complexity and novelty of AI applications, we should assume that unexpected failures, emergent capabilities, or surprising uses of the system will happen. We need to be prepared to handle such cases based on their severity and urgency. Some problems can be anticipated through proper analysis (like threat modeling), but others require monitoring and detecting unintended consequences and impacts beyond the intended USE CONTEXT. This applies not only to security and safety; it becomes most visible in challenges related to systemic and human bias and the various forms of algorithmic discrimination detected in practical scenarios.

Security Depends on Use Context

All these elements and aspects of USE CONTEXT can be relevant for identifying the threats an AI application will be exposed to, defining technical requirements, and using those requirements to evaluate implemented or available controls. That should allow us to better document and measure the risks related to an AI application, both inherent and residual (after considering all controls, constraints, and guidelines). That analysis helps us decide whether the use of an AI application is acceptable in a specific context, given the current state of technology as well as non-technical criteria. Some risks may be unacceptable regardless of the results of technical analysis (i.e., we don’t even need to look at MODEL and PLATFORM), for example, scenarios posing a clear threat to the safety, livelihoods, and rights of people that will require strong regulation.

Identifying the relevant non-technical elements and aspects of USE CONTEXT may be challenging due to the increasing complexity, multiple dependencies, and trust boundaries that we are only starting to recognize. Since we are talking about new systems that are still under development but are already applied in a broad range of applications, we need to pay close attention to any significant changes. The USE CONTEXTs of these applications can be very dynamic and evolve quickly because of improving results, changing scenarios, or new user behaviors. A model may be suitable only for a specific context (and unacceptable for others), and after a while it may no longer meet the requirements of even that same application. For those reasons, the analysis of USE CONTEXT cannot be a one-time project; it must be a continuous and responsive process of documenting, monitoring, and analyzing the use of AI applications that takes into consideration both internal and external changes (e.g., in the threat landscape).

Benefits and harms from AI applications are consequences of the ways we choose to apply these technologies.

“Technology is neither good nor bad, nor is it neutral.” (Kranzberg’s First Law of Technology)

Everything depends on the USE CONTEXT. We must understand what we are trying to protect, with detailed requirements, limitations, and constraints, and verify that actual use does not deviate from intended and approved specifications. That level of understanding of USE CONTEXT is needed for security and safety but also for other critical requirements of responsible AI, from the need for explainability and interpretability in domains like healthcare to universal needs for fairness, non-discrimination, and managing bias. And even though many security controls and procedures might be implemented at the MODEL and PLATFORM layers, the security of an AI application starts and ends in USE CONTEXT.

The visualization in this post is inspired by various diagrams of AI systems, including some from the EU and the OECD. This is still a work in progress, so please expect it to be updated.
